Transitioning from Agile to DevOps

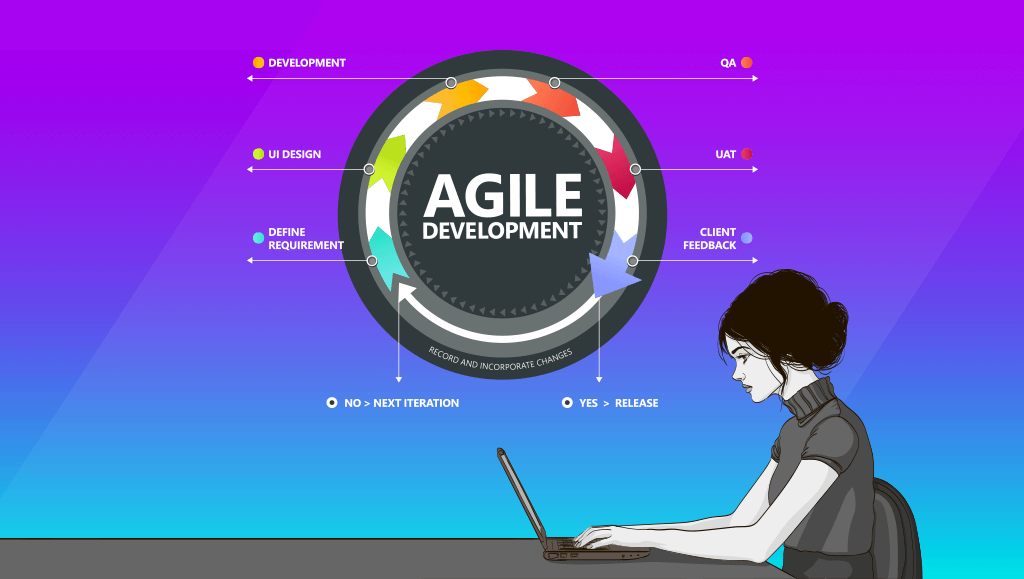

Some ask whether transitioning from Agile to DevOps principles requires rethinking deployment and hosting infrastructure. A common question is: "What are some best practices for cloud computing companies that want to transition from Agile to DevOps principles?" In practice, Agile and DevOps can be combined; there is no need to choose one methodology over the other.

Check out the Q&A below to learn how these two methodologies can complement each other.

How can cloud companies best address the concerns of Agile and DevOps?

It is a misconception that Agile and DevOps principles are in tension with each other. Rather, they each encompass a separate set of principles that apply to different parts of the software development life cycle.

Agile and DevOps principles can be successfully blended to ensure reliable, rapid and on-time deployments of working software.

Agile methodology stresses the immediacy of working software over comprehensive documentation, and embraces constant change pursued through short, rapid iterations of software development rather than well-defined "final" products.

DevOps, on the other hand, governs how the resulting software is tested, secured, deployed, and maintained as seamlessly as possible. DevOps is not an alternative or a response to Agile, but is best seen as a complement to Agile, which allows rapid release cycles that are secure, reliable and error-free.

What are the best practices and key advice for progressive IT teams?

Implementing DevOps often requires constructing a toolset based on cloud computing models, in that it advocates automation and repeatability of every aspect of the deployment process from the moment new code is committed to a project.

Continuous integration workflows are then built from a variety of scripts and automation tools like Jenkins. These build the cloud components necessary to serve the application, configured from a central repository using a configuration management suite like Ansible, Chef or Puppet. This ensures that each deployment is identical, minimizing the potential for human error.

Automated testing is another critical ingredient in the DevOps toolkit. It ranges from unit tests, which confirm the functionality of individual snippets of code, to functional and integration tests, which verify that the latest release satisfies its functional requirements without regressions.

DevOps thinking ensures that every deployment is as error-free as possible, all without the heavy workload of constant manual testing.

This focus on testing and infrastructure-as-code can align well with Agile's focus on rapid deployment in that it automates the most time-consuming portions of the software release process and allows developers to spend less time worrying about bug fixes and environmental differences, and more time implementing new features.

Simultaneously, it allows product owners to be confident in deployments, knowing that automated test cases are constantly checking for regressions, and CI/CD (continuous integration / continuous deployment) processes will reject a build that contains errors.

The fact that the cloud infrastructure is created from code at the time of the deployment is an important check that applications will function identically in test/development, staging/QA and production environments.
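The idea can be sketched in a few lines of Python: every environment is rendered from the same base definition, and only an explicit list of settings is allowed to differ. All names and values here (`BASE`, `PER_ENV`, `ALLOWED_TO_DIFFER`, the image and version strings) are made up for illustration and do not correspond to any particular provisioning tool.

```python
# Shared definition that every environment is built from (hypothetical values).
BASE = {
    "os_image": "ubuntu-16.04",
    "app_version": "1.4.2",
    "packages": ("nginx", "php-fpm"),
}

# Per-environment overrides; only sizing is expected to vary.
PER_ENV = {
    "development": {"instance_count": 1},
    "staging": {"instance_count": 1},
    "production": {"instance_count": 4},
}

ALLOWED_TO_DIFFER = {"instance_count"}


def render_environment(name: str) -> dict:
    """Build the full definition for one environment from code."""
    return {**BASE, **PER_ENV[name]}


def environments_are_consistent() -> bool:
    """Check that environments match on everything except allowed keys."""
    rendered = [render_environment(name) for name in PER_ENV]
    shared = [
        {key: value for key, value in env.items() if key not in ALLOWED_TO_DIFFER}
        for env in rendered
    ]
    return all(definition == shared[0] for definition in shared)
```

A check like `environments_are_consistent()` could run as part of the deployment itself, so that drift between staging and production is caught before release rather than after.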

How can a new DevOps structure be best adapted for developers who have been using Agile for a while?

From a developer's perspective, implementing DevOps requires little change in workflow from an existing Agile mindset. The same focus on rapid deployment exists and work can be broken into Agile sprints or scrum periods according to the needs of the team and product owner.

The additional expectation that DevOps places on developers is that all committed code will be automatically tested for unit, functional and integration performance before being automatically deployed, and rejected if it does not pass all regression tests.

Thus, developers may need time to embrace a TDD (test-driven development) approach and write tests as a prerequisite to building the code that satisfies them.
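To make the test-first order concrete, here is a small Python sketch in which the tests appear before the code that satisfies them. The `parse_version` function and its expected behaviour are hypothetical, chosen only to keep the example short.

```python
import unittest


# Step 1: the tests are written first and describe the behaviour we want.
class ParseVersionTests(unittest.TestCase):
    def test_parses_three_part_version(self):
        self.assertEqual(parse_version("2.10.3"), (2, 10, 3))

    def test_rejects_malformed_input(self):
        with self.assertRaises(ValueError):
            parse_version("2.x")


# Step 2: only then is the implementation written to make them pass.
def parse_version(text: str) -> tuple:
    """Turn a MAJOR.MINOR.PATCH string into a tuple of integers."""
    parts = text.split(".")
    if len(parts) != 3 or not all(part.isdigit() for part in parts):
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
    return tuple(int(part) for part in parts)
```

Before step 2 exists, running the tests fails with a `NameError`; that initial failure is the expected starting point of the TDD cycle.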

Should there be an overlapping period of Agile and DevOps and if so, how long should the overlap be?

Since Agile and DevOps are not mutually exclusive, they can be blended together over time, with more DevOps thinking added onto a functioning Agile workflow to continue to narrow the gap between development and operations.

Both approaches stress communication within the team, although there are cultural disagreements about the level of specialization and the best way to schedule work. Agile stresses that each member of the team be a jack-of-all-trades, while DevOps tends to allow for more specialized roles such as systems architect or security expert. Agile favors dividing work into short, rapidly repeated time chunks, while DevOps focuses more on stability over the long term.

Again, these approaches are not contradictory but can be blended to ensure that software is delivered as rapidly and reliably as possible.

The level of automation involved in a DevOps workflow may be unfamiliar to developers who are new to the methodology, but it soon becomes apparent that all of the automated testing, code verification, and deployment processes can ultimately free developers up to do what they do best, which is build new features for the product owner.

What tools or software solutions do you use?

Mobomo works with both Amazon Web Services (AWS) and Microsoft Azure as our primary providers of cloud services. We utilize our deep experience in open-source technology to deliver DevOps toolsets based on Linux with Ansible code-based provisioning, and orchestration with Jenkins.

Automated functional testing is done with Selenium coupled with Gherkin-based test frameworks such as Behat and Lettuce. We rely heavily on scripting languages like Python, Ruby and Bash to connect these toolsets together and provide a robust DevOps workflow that deploys code seamlessly and reliably, at a velocity that is fully compatible with Agile best practices.

What are your yardsticks for success?

Time of release from code commit to production readiness; client acceptance of new features; number of bugs caught by automated testing frameworks for each release; number of bugs missed by automated testing and reported after the fact; total downtime, service interruptions and other SLA violations resulting from unanticipated infrastructure issues, either deployment-related or due to poor planning; etc.
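Two of these yardsticks are easy to compute once release data is recorded. The sketch below assumes a hypothetical `Release` record; the field names and the sample figures are invented for illustration and are not real project data.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Release:
    """Hypothetical record of one release, for illustration only."""
    committed_at: datetime
    production_ready_at: datetime
    bugs_caught_by_tests: int
    bugs_reported_after_release: int
    downtime_minutes: float


def lead_time_hours(release: Release) -> float:
    """Time from code commit to production readiness, in hours."""
    delta = release.production_ready_at - release.committed_at
    return delta.total_seconds() / 3600


def escaped_bug_ratio(release: Release) -> float:
    """Share of known bugs that automated testing missed."""
    total = release.bugs_caught_by_tests + release.bugs_reported_after_release
    return release.bugs_reported_after_release / total if total else 0.0


# Made-up sample values, not measurements.
example = Release(
    committed_at=datetime(2018, 8, 1, 9, 0),
    production_ready_at=datetime(2018, 8, 1, 15, 0),
    bugs_caught_by_tests=9,
    bugs_reported_after_release=1,
    downtime_minutes=0.0,
)
```

Tracking these numbers per release makes trends visible: a falling lead time with a stable escaped-bug ratio suggests the automation is paying off.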

How long do you pursue a DevOps strategy before calling it off as a failure and moving back to Agile? Is there no turning back?

Given that Agile and DevOps are not mutually exclusive, there is no need to "call off" a DevOps transition and go "back" to Agile. Rather, they can be combined in ways that reinforce each other, with Agile functioning as the development team's methodology while DevOps provides the backbone for the cloud deployment, security, networking and testing aspects of the engineering process. Thus, DevOps minimizes disruptions, which allows Agile to provide rapid iterations of working software.

What is DevOps?

DevOps combines cultural philosophies, practices and tools to deliver products at a high velocity. Instead of keeping the traditional functional separation between developers, QA, and operations, we enable cross-functional technology teams that promote collaboration rather than tension. This collaboration leads teams to value repeatable processes and to eliminate single points of failure. DevOps advocates thinking of infrastructure as part of the application and allows for more rapid and reliable software release cycles.

How does Mobomo do DevOps?

Mobomo extends the same agile, iterative development model we use for design and development into our DevOps architecture services. We embrace a fully automated, repeatable, testable deployment process that allows for rapid integration of code and 100% environmental consistency, mitigating the risk of surprises once your app is in production.

Benefits of DevOps:

- Faster time-to-market due to rapid release cycles. DevOps allows multiple releases per day.

- Infrastructure can be demand-responsive and right-priced using cloud tools like auto-scaling and reserved instances.

- Full transparency into costs allows for increased operational intelligence.

- Dramatically lowers failure rate in production because testing is consistent across environments.

Processes

- Allows application of Agile/Scrum development methodologies to infrastructure.

- Continuous Integration/Delivery (CI/CD)

  - Code is deployed incrementally as often as possible using a fully-automated build/test/deploy process.

- Test-Driven Development (TDD)

  - Developers write automated tests first before the code to satisfy them.

  - All tests are then run on each release to identify functional regressions.

  - Integrates developers more fully into the QA and release process.

- Microservices and Containerization

  - Each modular component of an application is designed as a standalone service, avoiding the “monolith” approach.

  - Allows functionality to degrade incrementally in the event of a bug rather than the entire application failing.

  - Eliminates “spaghetti code” and makes applications more maintainable and future-proof.

- Graceful Failure

  - Assume that failures will happen in production, so simulate them as part of application testing.

  - High Availability: Eliminate all single points of failure in infrastructure with load balancing, clustering and other tools. (Horizontal vs. vertical scaling.)

  - Automate disaster recovery so that infrastructure becomes self-healing.
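The "degrade incrementally" and "simulate failures" points above can be illustrated with a short Python sketch. The recommendations service and the page structure are hypothetical; here the service is deliberately written to fail so that the fallback path is exercised.

```python
def fetch_recommendations(user_id: int) -> list:
    """Hypothetical call to a standalone service, simulated as being down."""
    raise ConnectionError("recommendations service is unreachable")


def render_home_page(user_id: int, recommender=fetch_recommendations) -> dict:
    """Build the page, degrading one feature if its service fails."""
    page = {"user_id": user_id, "degraded": False}
    try:
        page["recommendations"] = recommender(user_id)
    except (ConnectionError, TimeoutError):
        # One failing service costs one feature, not the whole page.
        page["recommendations"] = []
        page["degraded"] = True
    return page
```

Passing the service call in as a parameter is a design choice that makes it easy for a test to swap in a failing or a healthy stand-in.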

Tools

- Cloud-agnostic: Multiple engineers certified in Amazon Web Services; also experienced with Azure, Rackspace, and OpenStack.

- Infrastructure: CloudFormation, Terraform, Docker, Vagrant

- Provisioning: Ansible, Chef, and Puppet

- CI/CD: Jenkins, TeamCity and CircleCI

- Monitoring and Notifications: CloudAware, CloudCheckr, Nagios, DataDog

I'm a huge fan of Heroku. I mean I'm a huge fan of Heroku. Their platform is much closer to exactly how I would want things to work than I ever thought I would get. However, in the past few weeks Heroku has had a number of serious outages, enough that I started thinking that maybe we needed to work out a backup plan for when our various Heroku-hosted applications were down. That's when I realized a big problem, and it's not just a problem with Heroku but with any Platform-as-a-Service:

The moment you need to have failovers or fallbacks for a PaaS app is the moment that it loses 100% of its value.

Think about it: to have a backup for a Heroku app, you're going to need to have a mirror of your application (and likely its database as well) running on separate architecture. You will then need to (in the best case) set up some kind of proxy in front of Heroku that can detect failures and automatically swap over to your backup architecture, or (in the easiest case) have the backup architecture up and ready to go and be able to flip a switch and use it.
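The failure-detecting proxy described above boils down to a health check plus an ordered list of backends. The Python sketch below shows only that selection logic, with health checks passed in as plain callables; the backend names are hypothetical and no real networking is performed.

```python
from typing import Callable, Sequence, Tuple

# A backend is a name plus a zero-argument health check (both hypothetical).
Backend = Tuple[str, Callable[[], bool]]


def choose_backend(backends: Sequence[Backend]) -> str:
    """Return the first backend whose health check passes."""
    for name, is_healthy in backends:
        try:
            if is_healthy():
                return name
        except Exception:
            # A health check that crashes counts as an unhealthy backend.
            continue
    raise RuntimeError("no healthy backend available")
```

The selection logic is the easy part. Keeping the backup backend deployed, configured and in sync with the primary is where the real cost lies.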

The backup architecture is obviously going to have to be somewhere else (preferably not on EC2) to maximize the chance that it will be up when Heroku goes down. That leaves you with a glaring problem: if you have to mirror your app's architecture on another platform, all of the ease of deployment and worry-free infrastructure evaporates. This leaves you with two options:

- Put your faith in your PaaS provider and figure that they will (in general) be able to do a better job of keeping your site up than you could without hiring a team of devops engineers.

- Scrap PaaS entirely and go it on your own.

A "PaaS with fallback" simply doesn't work because it's easier to mirror your architecture across multiple platforms that you control than it is to mirror it from a managed PaaS to a platform you control.

Don't Panic

Note that I'm not telling anyone to abandon Heroku or the PaaS concept; quite the opposite. My personal decision is to take choice #1 and trust that while Heroku may have the occasional hiccup (or full-on nosedive) they are still providing high levels of uptime and a developer experience that is simply unmatched.

Heroku has done a great job of innovating the developer experience for deploying web applications, but what they need to do next is work on innovating platform architecture to be more robust and reliable than any other hosting provider. Heroku should be spread across multiple EC2 availability zones as a bare minimum and in the long run should even spill over into other cloud providers when necessary.

If they can nail reliability the way they've nailed ease-of-use, even the most skeptical of developers would have to take a look. If they could say with confidence "Your app will be up even if all of EC2 is down," that would be yet another powerful selling point for an already powerful system.

The Third Option

There is actually a third option: if your PaaS is available as open source then you will be able to run their architecture on someone else's systems, giving you a backup that is at least a middle ground between the ease of PaaS and the reliability of Do-it-Yourself. The two current players in this arena are Cloud Foundry and OpenShift.

While Heroku currently has them beat for developer experience (in my opinion) and the addon ecosystem makes everything just oh-so-easy, it might be worth exploring these as a potential middle ground. Of course, if Heroku would open source their architecture (or even provide a way to get an app configured for Heroku up and running on a third-party system with little to no hassle) that would be great as well.

In the end I remain a die-hard fan of PaaS. It's simply amazing that, merely by running a single command and pushing to a git repo, I can have a production environment for whatever I'm toying with available in seconds. After the past few weeks, however, I am spending a little more time worrying about whether those production environments will be up and running when I need them to be. And that's the problem with PaaS.

You've just written a masterpiece of a web app. It's fun, it's viral, and it's useful. It's clearly going to be "Sliced Bread 2.0". But what comes next is a series of unforeseen headaches. You'll outgrow your shared hosting and need to get on cloud services. A late night hack session will leave you sleep deprived, and you'll accidentally drop your production database instead of your staging database. Once you serve up a handful of error pages, your praise-singing users will leave you faster than it takes to start a flamewar in #offrails. But wait! Just as Ruby helped you build your killer app, Ruby can also help you manage your infrastructure as your app grows. Read on for a list of useful gems every webapp should have.

Backups

When you make a coding mistake, you can revert to a known good commit. But when disaster wreaks havoc with your data, you had better have an offsite backup ready to minimize your losses. Enter the backup gem, a DSL for describing your different data stores and offsite storage locations. Once you specify which data stores your application uses (MySQL, PostgreSQL, Mongo, Redis, and more) and where you want to store the backups (rsync, S3, CloudFiles), Backup will dump and store them. You can specify how many backups you'd like to keep in rotation, and there are various extras such as gzip compression and notifiers for when backups are created or fail.
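To show what "keeping a number of backups in rotation" means, here is a language-neutral sketch in Python. It is not the gem's DSL or API; `backups_to_delete` is a hypothetical helper, and it assumes each file name embeds a sortable date.

```python
def backups_to_delete(backup_names: list, keep: int) -> list:
    """Return the backups that fall outside the rotation window.

    Assumes names embed a sortable timestamp, e.g. db-2011-06-01.sql.gz,
    so that sorting the names also sorts them by age.
    """
    if keep < 0:
        raise ValueError("keep must not be negative")
    newest_first = sorted(backup_names, reverse=True)
    return newest_first[keep:]
```

For example, with three dated backups and `keep=2`, only the oldest one is returned for deletion.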

Cron Jobs

Having backups configured doesn't make you any less absent minded about running your backups. The first remedy that jumps to mind is editing your crontab. But man, it's hard to remember the format on that sucker. If only there were a Ruby wrapper around cron... Fortunately there is! Thanks to the whenever gem, you can define recurring tasks in a Ruby script.
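The format that is so hard to remember has five time fields followed by the command: minute, hour, day of month, month, day of week. The Python sketch below wraps that order in named arguments to show why a wrapper helps; it is not the whenever gem's DSL, and `cron_line` and the script path are hypothetical.

```python
def cron_line(command: str, minute="*", hour="*", day_of_month="*",
              month="*", day_of_week="*") -> str:
    """Build one crontab entry from named fields instead of positions."""
    return f"{minute} {hour} {day_of_month} {month} {day_of_week} {command}"


# Run a (hypothetical) backup script every day at 02:30.
nightly_backup = cron_line("/usr/local/bin/run_backup", minute=30, hour=2)
```

Here `nightly_backup` holds the string `30 2 * * * /usr/local/bin/run_backup`, which is the line you would otherwise have to write by hand.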

Cloud Services

With the number of cloud services available today, it's becoming more common to have your entire infrastructure hosted in the cloud. Many of these services offer APIs to help you tailor and control your environments programmatically. Having APIs is great, but it's tough to keep them all in your head.

The fog gem is the one API to rule them all. It provides a consistent interface to several cloud services, with a specific adapter for each one. Because every adapter follows the same fog interface, it is really easy to switch between cloud services. Say you were using Amazon's S3, but wanted to switch to Rackspace's CloudFiles. If you use fog, it's as simple as replacing your credentials and changing the service name. You can create real cloud servers, or create mock ones for testing. Even if you don't use any cloud services, fog has adapters for non-cloud servers and filesystems.
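The underlying idea, one interface with interchangeable adapters and a mock for testing, can be sketched in Python. This is not fog's API: `ObjectStore`, `InMemoryStore` and `archive_report` are hypothetical names used to illustrate the pattern.

```python
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """The single interface that application code depends on."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Mock adapter for tests; a real adapter would call a cloud API."""

    def __init__(self) -> None:
        self._objects = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(store: ObjectStore, name: str, body: str) -> str:
    """Application code: works with any adapter that follows the interface."""
    key = f"reports/{name}.txt"
    store.put(key, body.encode("utf-8"))
    return key
```

Because `archive_report` only knows about `ObjectStore`, swapping providers means passing in a different adapter, and tests can run against the in-memory mock without touching a network.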

Exception Handling

Hoptoad is a household name in the Ruby community. It catches exceptions created by your app, and sends them into a pretty web interface and other notifications. If you can't use Hoptoad because of a firewall, check out the self-hostable Errbit.

Monitoring

When your infrastructure isn't running smoothly, it had better be raising all kinds of alarms and sirens to get someone to fix it. Two popular monitoring solutions are God and Monit. God lets you configure which services you want to monitor in Ruby, and the Monit gem gives you an interface to query services you have registered with Monit. If you have a Ruby script that you'd like to have running like a traditional Unix daemon, check out the daemons gem. It wraps around your existing Ruby script and gives you a 'start', 'stop', 'restart' command line interface that makes it easier to monitor. Don't forget to monitor your background services; it sucks to have all your users find your broken server before you do.

Staging

Your application is happily running in production, but all of a sudden, it decides to implode on itself for a specific user when they update their avatar. Try as you might, you just can't reproduce the bug locally. You could do some cowboy debugging on production, but you'll end up dropping your entire database by accident. Oops.

It's times like these that you'll be thankful you have a staging environment set up. If you use capistrano, make sure to check out the capistrano-ext gem and its multi-stage deploy functionality. To reproduce your bug on the same data, you can use the taps gem to transfer your data from your production database to your staging database. If you're using Heroku then it's already built-in.

Before you start testing your mailers on staging, do all of your users a favor and install the mail_safe gem. It stubs out ActionMailer so that your users don't get your testing spam. It also lets you send emails to your own email address for testing.
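The general idea of keeping test mail away from real users can be sketched as a recipient filter. This Python snippet is an illustration of the concept only, not how the mail_safe gem is implemented; `safe_recipients`, the domain and the addresses are hypothetical.

```python
def safe_recipients(recipients: list, internal_domain: str, fallback: str) -> list:
    """Keep internal addresses; replace all external ones with one fallback."""
    suffix = "@" + internal_domain.lower()
    safe = []
    for address in recipients:
        if address.lower().endswith(suffix):
            safe.append(address)
        elif fallback not in safe:
            # External users never receive staging mail; the developer does.
            safe.append(fallback)
    return safe
```

With this filter in front of the mailer on staging, a message addressed to two customers and one colleague would go only to the colleague and to the developer's own fallback address.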

CLI Tools

Thor is a good foundation for writing CLI utilities in Ruby. It has interfaces for manipulating files and directories, parsing command line options, and manipulating processes.

Deployment

Capistrano helps you deploy your application, and Chef configures and deploys your servers and services. If you use Vagrant for managing development virtual machines, you can reuse your Chef cookbooks for production.

Conclusion

All of these gems help us maintain our application infrastructure in a robust way. They free us from running one-off scripts and hacks in production and give us a repeatable process for managing everything our app runs on. And on top of all the awesome functionality these tools provide, we can also write Ruby to interact with them and version control them alongside our code. So for your next killer webapp, don't forget to add some killer devops to go along with it.