Transitioning from Agile to DevOps

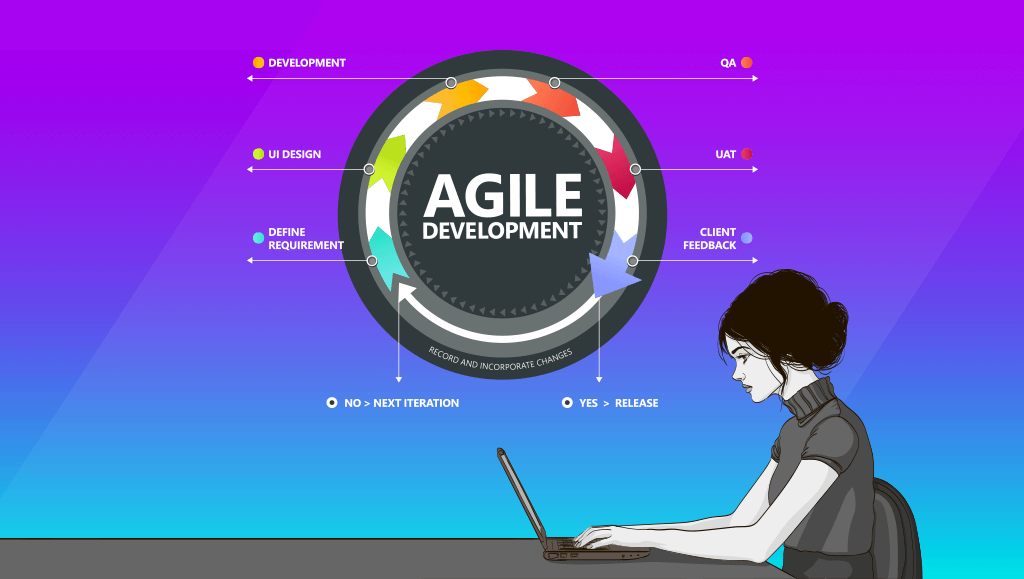

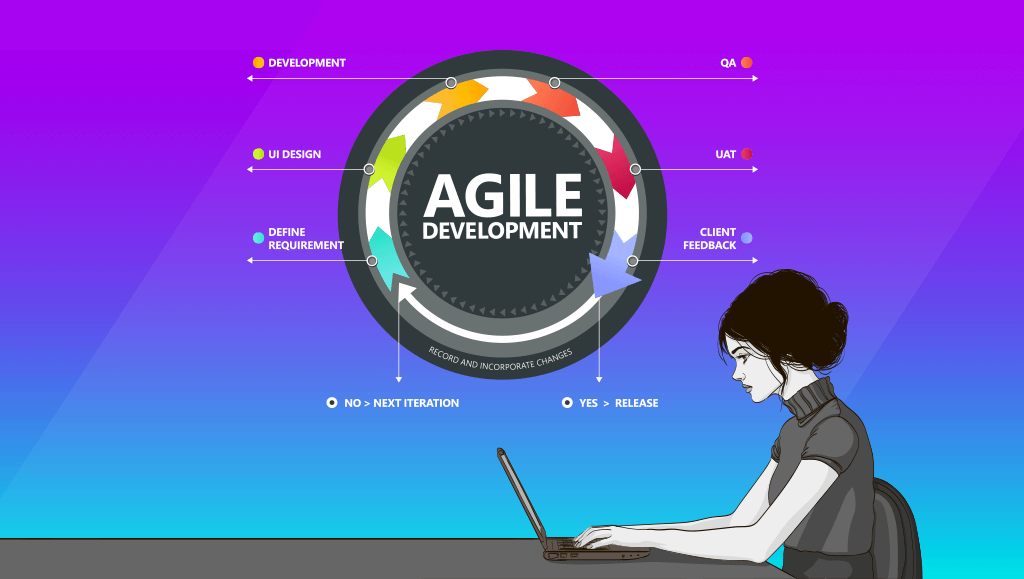

Some ask whether moving from Agile to DevOps principles requires rethinking deployment and hosting infrastructure. A common question is: "What are some best practices for cloud computing companies that want to transition from Agile to DevOps principles?" In fact, Agile and DevOps can be combined; there is no need to choose one methodology over the other.

Check out the Q & A below to learn how these two methodologies can complement each other.

How can cloud companies best address the concerns of Agile and DevOps?

It is a misconception that Agile and DevOps principles are in tension with each other. Rather, each encompasses a separate set of principles that applies to a different part of the software development life cycle.

Agile and DevOps principles can be successfully blended to ensure reliable, rapid and on-time deployments of working software.

Agile methodology stresses the immediacy of working software over comprehensive documentation, and embraces constant change pursued through short, rapid iterations of software development rather than well-defined "final" products.

DevOps, on the other hand, governs how the resulting software is tested, secured, deployed, and maintained as seamlessly as possible. DevOps is not an alternative or a response to Agile; it is best seen as a complement to Agile that allows rapid release cycles to be secure, reliable and as error-free as possible.

What are the best practices and key advice for progressive IT teams?

Implementing DevOps often requires constructing a toolset based on cloud computing models, in that it advocates automation and repeatability of every aspect of the deployment process from the moment new code is committed to a project.

Continuous integration workflows are then constructed using a variety of scripts and automation tools like Jenkins. These workflows build the cloud components necessary to serve the application, configured from a central repository using a configuration management suite like Ansible, Chef or Puppet. This ensures each deployment is identical, minimizing the potential for human error.

Automated testing is another critical ingredient in the DevOps toolkit. It ranges from unit tests, which confirm the functionality of individual snippets of code, to functional and integration tests, which verify that the latest release satisfies functional requirements without regressions.
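To make the distinction concrete, here is a minimal sketch using Python's built-in `unittest` module. The functions `apply_discount` and `order_total` are hypothetical and exist only for illustration: the first test class checks one small piece of code in isolation, while the second checks that two pieces work together.

```python
import unittest
from typing import List


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business rule: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def order_total(prices: List[float], percent: float) -> float:
    """Hypothetical higher-level function built on apply_discount."""
    return round(sum(apply_discount(p, percent) for p in prices), 2)


class UnitTests(unittest.TestCase):
    """Unit tests: one small function, checked in isolation."""

    def test_discount_is_applied(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


class IntegrationTests(unittest.TestCase):
    """Integration-style test: several pieces working together."""

    def test_order_total_uses_discount(self):
        self.assertEqual(order_total([100.0, 50.0], 10), 135.0)
```

Saved as a file, tests like these can be run with `python -m unittest`, which is the kind of command an automation server would typically invoke on every commit.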

DevOps thinking ensures that every deployment is as error-free as possible, all without the heavy workload of constant manual testing.

This focus on testing and infrastructure-as-code can align well with Agile's focus on rapid deployment in that it automates the most time-consuming portions of the software release process and allows developers to spend less time worrying about bug fixes and environmental differences, and more time implementing new features.

Simultaneously, it allows product owners to be confident in deployments, knowing that automated test cases are constantly checking for regressions, and CI/CD (continuous integration / continuous deployment) processes will reject a build that contains errors.
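As a rough illustration of how a pipeline can reject a build, the sketch below runs a list of stages in order and stops at the first one that exits with a non-zero status. It is not how Jenkins or any specific CI product is implemented; the function `run_pipeline` and the stage commands are assumptions made for this example, and a real pipeline would call an actual test runner and deployment tool.

```python
import subprocess
import sys
from typing import List, Sequence, Tuple


def run_pipeline(stages: Sequence[Tuple[str, List[str]]]) -> bool:
    """Run each stage in order; return False at the first failing stage."""
    for name, command in stages:
        result = subprocess.run(command)
        if result.returncode != 0:
            # A non-zero exit code means the stage failed, so nothing
            # after it (including deployment) is allowed to run.
            print(f"Stage '{name}' failed; build rejected.")
            return False
        print(f"Stage '{name}' passed.")
    return True


# Hypothetical stages that stand in for real test and deploy commands.
EXAMPLE_STAGES = [
    ("unit tests", [sys.executable, "-c", "assert 1 + 1 == 2"]),
    ("integration tests", [sys.executable, "-c", "raise SystemExit(1)"]),
    ("deploy", [sys.executable, "-c", "print('deploying')"]),
]
```

Calling `run_pipeline(EXAMPLE_STAGES)` returns `False` because the simulated integration stage fails, and the deploy stage never runs. That ordering is the property product owners rely on: deployment only happens after every earlier check has passed.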

The fact that the cloud infrastructure is created from code at the time of the deployment is an important check that applications will function identically in test/development, staging/QA and production environments.
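The idea can be sketched in a few lines of Python. This is a simplified stand-in for what a provisioning tool does, not a real provisioning API: the names `EnvironmentSpec`, `build_spec`, the image name, and the instance counts are all hypothetical. Every environment is generated from the same shared definition, and only explicitly declared values (here, the number of instances) are allowed to differ.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class EnvironmentSpec:
    name: str
    os_image: str
    packages: Tuple[str, ...]
    instance_count: int


# Shared definition: everything that must be identical everywhere.
BASE_OS_IMAGE = "ubuntu-22.04"
BASE_PACKAGES = ("nginx", "python3")

# The only values permitted to vary between environments.
INSTANCE_COUNTS: Dict[str, int] = {
    "development": 1,
    "staging": 2,
    "production": 4,
}


def build_spec(environment: str) -> EnvironmentSpec:
    """Build an environment description from the shared definition."""
    return EnvironmentSpec(
        name=environment,
        os_image=BASE_OS_IMAGE,
        packages=BASE_PACKAGES,
        instance_count=INSTANCE_COUNTS[environment],
    )


def software_stack(spec: EnvironmentSpec) -> Tuple[str, Tuple[str, ...]]:
    """Return the parts of a spec that should match across environments."""
    return (spec.os_image, spec.packages)
```

Because no one edits an environment by hand, comparing `software_stack(build_spec("staging"))` with `software_stack(build_spec("production"))` always yields the same result, which is what makes a passing test in staging meaningful for production.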

How can a new DevOps structure be best adapted for developers who have been using Agile for a while?

From a developer's perspective, implementing DevOps requires little change in workflow from an existing Agile mindset. The same focus on rapid deployment exists and work can be broken into Agile sprints or scrum periods according to the needs of the team and product owner.

The additional expectation that DevOps places on developers is that all committed code will be automatically tested for unit, functional and integration performance before being automatically deployed, and rejected if it does not pass all regression tests.

Thus, developers may need time to embrace a TDD (test-driven development) approach and write tests as a prerequisite to building the code that satisfies them.
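A small, hedged example of the test-first rhythm: the test class below is written before the function exists and describes the behavior the team wants. The `slugify` function is a hypothetical feature chosen for illustration, and the implementation underneath is the least code needed to make those tests pass.

```python
import re
import unittest


# Step 1: write the tests first. They fail until slugify() is implemented.
class SlugifyTest(unittest.TestCase):
    def test_spaces_become_hyphens(self):
        self.assertEqual(slugify("Agile and DevOps"), "agile-and-devops")

    def test_punctuation_is_dropped(self):
        self.assertEqual(slugify("Hello, World!"), "hello-world")


# Step 2: write just enough code to satisfy the tests above.
def slugify(title: str) -> str:
    """Lowercase the title and join its alphanumeric words with hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)
```

Once the tests pass, they stay in the repository as regression checks, so any later commit that breaks this behavior is caught automatically.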

Should there be an overlapping period of Agile and DevOps and if so, how long should the overlap be?

Since Agile and DevOps are not mutually exclusive, they can be blended together over time, with more DevOps thinking added onto a functioning Agile workflow to continue to narrow the gap between development and operations.

Both approaches stress communication within the team, although there are cultural disagreements on two points. The first is the level of specialization: Agile stresses that each member of the team be a jack-of-all-trades, while DevOps tends to allow for more specialized roles such as systems architect, security expert, etc. The second is the best way to schedule work: Agile favors dividing work into short, rapidly repeated time chunks, while DevOps focuses more on stability over the long term.

Again, these approaches are not contradictory but can be blended to ensure that software is delivered as rapidly and reliably as possible.

The level of automation involved in a DevOps workflow may be unfamiliar to developers who are new to the methodology, but it soon becomes apparent that all of the automated testing, code verification, and deployment processes can ultimately free developers up to do what they do best, which is build new features for the product owner.

What tools or software solutions do you use?

Mobomo works with both Amazon Web Services (AWS) and Microsoft Azure as our primary providers of cloud services. We utilize our deep experience in open-source technology to deliver DevOps toolsets based on Linux with Ansible code-based provisioning, and orchestration with Jenkins.

Automated functional testing is done with Selenium coupled with Gherkin-based test frameworks such as Behat and Lettuce. We rely heavily on scripting languages like Python, Ruby and Bash to connect these toolsets together and provide a robust DevOps workflow that deploys code seamlessly and reliably, at a velocity that is fully compatible with Agile best practices.

What are your yardsticks for success?

Time of release from code commit to production readiness; client acceptance of new features; number of bugs caught by automated testing frameworks for each release; number of bugs missed by automated testing and reported after the fact; total downtime, service interruptions and other SLA violations resulting from unanticipated infrastructure issues, either deployment-related or due to poor planning; etc.
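Two of these yardsticks are simple enough to compute directly. The sketch below is only an illustration: the `Release` record and its field names are assumptions, not the format of any particular tracking tool. It measures the time from code commit to production readiness and the share of bugs that automated testing missed.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Release:
    committed_at: datetime
    production_ready_at: datetime
    bugs_caught_by_tests: int
    bugs_reported_after_release: int


def lead_time(release: Release) -> timedelta:
    """Time from code commit to production readiness."""
    return release.production_ready_at - release.committed_at


def escaped_bug_rate(release: Release) -> float:
    """Fraction of all known bugs that automated testing missed."""
    total = release.bugs_caught_by_tests + release.bugs_reported_after_release
    if total == 0:
        return 0.0
    return release.bugs_reported_after_release / total


# Hypothetical sample data for one release.
example = Release(
    committed_at=datetime(2024, 1, 1, 9, 0),
    production_ready_at=datetime(2024, 1, 1, 15, 30),
    bugs_caught_by_tests=9,
    bugs_reported_after_release=1,
)
```

For the sample release, `lead_time(example)` is six and a half hours and `escaped_bug_rate(example)` is 0.1. Tracking both numbers from release to release shows whether the pipeline is getting faster without letting more bugs through.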

How long do you pursue a DevOps strategy before calling it off as a failure and moving back to Agile? Is there no turning back?

Given that Agile and DevOps are not mutually exclusive, there is no need to "call off" a DevOps transition and go "back" to Agile. Rather, they can be combined in ways that reinforce each other, with Agile functioning as the development team's methodology while DevOps provides the backbone for the cloud deployment, security, networking and testing aspects of the engineering process. Thus, DevOps minimizes disruptions, which allows Agile to provide rapid iterations of working software.
